Introduction

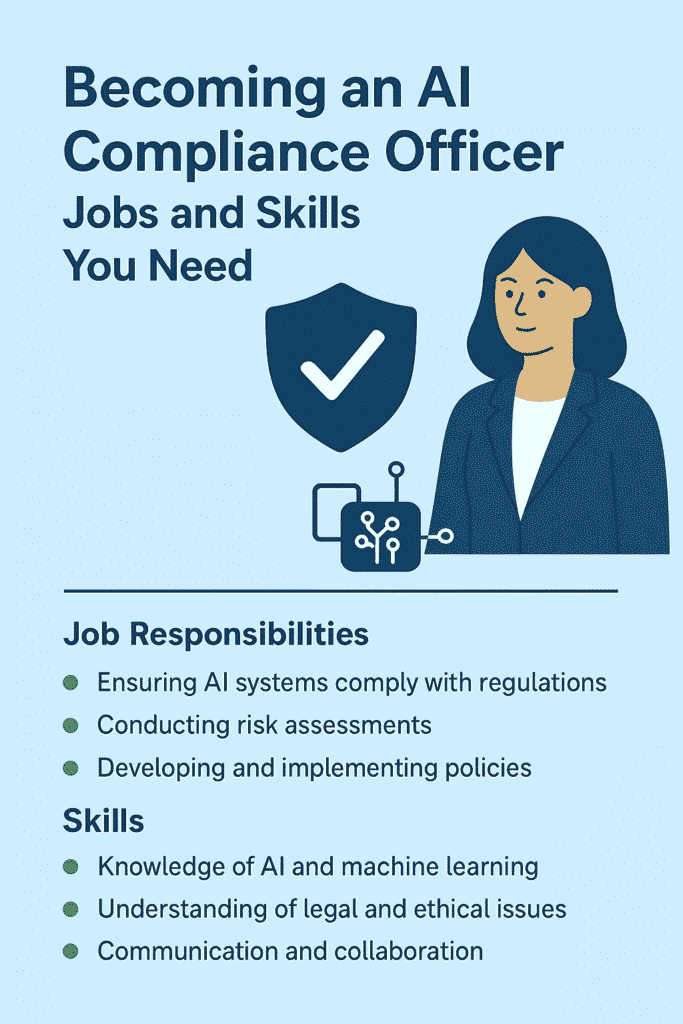

Artificial Intelligence (AI) is transforming industries, from finance and healthcare to transportation and creative arts. With this rapid growth comes growing concern: How can we ensure AI systems are used responsibly, ethically, legally, and safely? That’s where the role of AI Compliance Officer (sometimes called AI Ethics & Compliance Officer, AI Governance Officer, Responsible AI Officer, etc.) becomes crucial.

An AI Compliance Officer sits at the intersection of technology, law/regulation, and ethics. They ensure organizations using AI do so in ways that respect individual rights, comply with laws, manage risk, protect reputation, and build trust with users and stakeholders.

1. What Does an AI Compliance Officer Do?

Before working out what you need, it helps to be clear about what the job looks like in practice.

Here are the typical responsibilities, duties, and expectations:

Core Responsibilities

- Regulatory Awareness and Legal Compliance

Stay abreast of laws, regulations, and standards relevant to AI. This might include:

- Data protection laws (e.g. GDPR in Europe, CCPA in California, various national privacy laws)

- Emerging AI-specific regulations (like the EU AI Act)

- Sector-specific laws (e.g. healthcare, finance, telecommunications)

- Standards and frameworks (ISO/IEC, NIST, etc.)

- Ethics & Fairness

AI systems can unintentionally introduce bias, discriminate, violate human rights, or produce unfair outcomes. The AI Compliance Officer must oversee policies, audits, and controls to ensure fairness, non-discrimination, and alignment with ethical principles.

- Risk Assessment & Management

Identify risks associated with AI deployment: legal, operational, reputational, ethical. Evaluate impact, probability, and devise mitigation plans. Often this includes risk-tier classification of AI systems (e.g. distinguishing high-risk vs low-risk) under regulation like the EU AI Act.

- Data Governance & Privacy

Ensure that data used for AI (training, testing, inference) is collected, stored, processed, shared, and secured in compliance with laws and best practices. Ensure privacy, data minimization, anonymization/pseudonymization, etc.

- Transparency, Explainability & Model Accountability

Many regulations or guidelines call for AI systems to be explainable (so stakeholders can understand decisions), and for development processes to be auditable and documented. The compliance officer often has to ensure the organization maintains documentation, audit trails, and that models can explain their outputs.

- Policy & Governance Frameworks

Creating, implementing, and maintaining internal policies, standards, and guidelines for AI development and deployment. This includes creating governance structures (e.g., oversight committees, ethics boards) and establishing roles & responsibilities.

- Monitoring, Audits & Reporting

Regular monitoring of AI systems in production to detect drift, bias, performance degradation, misuse, or non-compliance. Conduct internal or external audits. Report to leadership, possibly to regulators. Keep up with regulatory changes and ensure organizational readiness.

- Training & Stakeholder Engagement

Educate internal stakeholders (developers, product managers, executives, even non-technical staff) about AI compliance, ethics, risks. Foster a culture of responsible AI. Also liaise with external stakeholders like regulators, customers, auditors.

- Incident Response & Remediation

In case of breaches, ethical failures, bias discovered in deployed AI, privacy violations, etc., the compliance officer leads or coordinates responses: investigating, containing harm, fixing issues, communicating, possibly reporting.

- Continuous Improvement

Since AI technology and regulation evolve fast, an AI Compliance Officer must ensure the organization adapts: updating policies, improving controls, incorporating new technical solutions, anticipating changes in laws.
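The risk assessment duty above (evaluate impact and probability, then prioritize mitigation) can be sketched as a small risk register. This is a minimal illustration, not a prescribed method: the 1 to 5 scales, the `AIRisk` class, the `tier` thresholds, and the example systems are all hypothetical, and the internal tiers shown here are not the legal risk categories of the EU AI Act.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    system: str
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Classic likelihood x impact scoring, giving a value from 1 to 25.
        return self.likelihood * self.impact


def tier(score: int) -> str:
    """Map a score to an illustrative internal tier (thresholds are assumptions)."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


register = [
    AIRisk("credit-scoring-model", "Biased outcomes for protected groups", 3, 5),
    AIRisk("support-chatbot", "Inaccurate answers to customers", 4, 2),
    AIRisk("internal-search", "Stale results", 2, 1),
]

# Review the highest-scoring risks first.
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{risk.system}: score={risk.score} tier={tier(risk.score)}")
```

In practice the scales and thresholds would come from the organization's own risk policy, and each entry would also carry an owner and a mitigation plan.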

Variations Based on Context

Depending on the industry, size of organization, geography, and maturity of AI adoption, the role may differ:

- In smaller orgs or startups, the AI compliance role may be part-time or merged with roles like legal, data governance, or product management.

- In large companies, especially in regulated sectors, there might be full teams under this role.

- Geographical regulations differ: what’s required under EU law may differ in the U.S., Asia, India, etc. So duties vary depending on location.

- The planning horizon may differ: some organizations are reactive (responding to regulation), others are proactive (building governance ahead of regulation).

2. What Skills & Competencies Are Needed

To perform the above responsibilities well, you need a broad mix of skills: legal/regulatory, technical, ethical, and interpersonal. Here’s a breakdown.

Technical / Domain Knowledge

- Understanding AI / Machine Learning Basics

- How AI models are trained, validated, tested, deployed, monitored

- Understanding of algorithmic bias, data sets, feature selection, overfitting, generalization, explainability techniques (e.g. SHAP, LIME)

- Understanding of model lifecycle: versioning, drift, retraining, robustness

- Data Governance, Security, and Privacy

- Knowledge of data lifecycle: collection, storage, retention, deletion

- Techniques for anonymization / de-identification / pseudonymization

- Data security best practices: access control, encryption, audits

- Understanding of privacy laws globally (GDPR, CCPA, etc.) and regionally relevant laws

- Regulatory, Legal & Standards Knowledge

- Deep familiarity with relevant laws and regulation in your jurisdiction(s) (e.g. data protection, AI-specific regulation)

- Awareness of industry standards and frameworks (ISO/IEC standards, NIST, etc.)

- Knowledge of audit frameworks and compliance testing procedures

- Risk Assessment & Management

- Risk identification: legal, ethical, systemic, reputational, operational risks

- Risk measurement: impact, likelihood, severity

- Mitigation strategy development

- Monitoring risk over time

- Ethics & Philosophy

- Knowledge of ethical theories (consequentialism, deontology, virtue ethics) helps in making judgments about fairness, harm, bias

- Understanding social context: fairness, bias, non-discrimination, equity

- Awareness of human rights, moral responsibility
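To make the pseudonymization item in the list above more concrete, here is a minimal sketch using a keyed hash (HMAC-SHA256) from Python's standard library. The field names, the example records, and the hard-coded key are assumptions for illustration only; a real key would be stored separately from the data, for example in a secrets manager.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable keyed-hash token for an identifier."""
    # Normalize so that trivially different spellings map to the same token.
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


# Hypothetical key, shown inline only to keep the example self-contained.
key = b"example-key-not-for-production"

records = [
    {"email": "Alice@example.com", "loan_amount": 12000},
    {"email": "alice@example.com ", "loan_amount": 3000},
    {"email": "bob@example.com", "loan_amount": 7000},
]

# Replace the direct identifier with a token before the data reaches the ML team.
pseudonymized = [
    {"user_token": pseudonymize(r["email"], key), "loan_amount": r["loan_amount"]}
    for r in records
]

for row in pseudonymized:
    print(row["user_token"][:12], row["loan_amount"])
```

The same person always gets the same token, so records can still be joined, but the token cannot be recomputed without the key. Note that under the GDPR pseudonymized data is still personal data, because whoever holds the key can re-identify it; this is different from anonymization.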

Soft Skills & Interpersonal Competencies

- Communication Skills

- Ability to explain complex technical or legal ideas to non-experts (product teams, management, etc.)

- Writing clear policies, reports, executive summaries

- Verbal communication: presentations, training, stakeholder discussions

- Collaboration & Cross-functional Work

- Working with data scientists, engineers, legal, product, operations, etc.

- Building consensus, negotiating trade-offs (e.g. between speed of product release vs risk)

- Analytical Thinking & Problem-solving

- Ability to dig into technical details to find where things could go wrong

- Critical thinking to assess risk, interpret regulation, determine what’s required

- Attention to Detail

- Small omissions in data collection or documentation can lead to regulatory non-compliance or ethical issues

- Meticulous audits, document tracking, version control, validation

- Ethical Judgment and Integrity

- Being able to make sound ethical decisions even in grey zones

- Strong personal and professional ethics, commitment to doing what’s right

- Adaptability & Learning Mindset

- Regulations, AI methods, and public expectations change rapidly

- You must continuously learn, adapt, and update knowledge

- Project & Process Management

- Planning compliance programs, timelines

- Tracking progress, coordinating audits/training

- Budgeting, resourcing

- Cultural Sensitivity and Global Perspective

- If working across jurisdictions, understanding cultural norms, local regulations, language, etc.

- Being aware of how risk perception differs by region

3. Education, Certifications, and Experience

What will make your resume more credible and help you grow in this path?

Formal Education

- A bachelor’s degree is usually required; relevant fields include Law, Computer Science, Data Science, Ethics, Philosophy, or Information Security

- A master’s degree can be a plus – e.g., in AI / ML, Data Privacy, Law, or Ethics

- Interdisciplinary programs are especially helpful – those combining law, technology, and philosophy / ethics

Certifications & Specialized Training

Certifications help you demonstrate your knowledge, especially for employers who may not yet fully understand what “AI compliance” entails.

- EXIN AI Compliance Professional (AICP): Part of a career path including foundations in information security (ISO/IEC 27001) and privacy & data protection

- AICCI AI Compliance Officer (AICO): Teaches risk assessment, conformity processes, and alignment with AI governance frameworks like ISO/IEC 42001.

- Certifications in privacy/data protection (e.g. CIPP [Certified Information Privacy Professional], CIPM, etc.), ISO standards, or those more focused on security or risk management

- Ethics and AI courses: universities, online platforms offering courses in AI ethics, bias, transparency etc.

- Staying certified in related fields like cybersecurity (if relevant), data governance, or information security

Experience

- Working in compliance, legal, data privacy, risk management, or governance functions gives a foundation. Even roles like compliance analyst or data privacy analyst help.

- Exposure to AI/ML projects: being part of teams that build or deploy AI helps you understand technical considerations and pitfalls.

- Working in regulated industries (healthcare, finance, government) can give experience with compliance frameworks, audits, etc.

- Participating in internal policy creation, audits, risk assessments, and being hands-on with governance work.

4. Job Titles, Variants, and Industry Sectors

Because the field of AI compliance is relatively new, different companies label roles differently. Also, the sector you are in makes a difference.

Common Job Titles

- AI Compliance Officer

- AI Ethics & Governance Officer

- Responsible AI Officer

- AI Policy & Compliance Lead

- AI Governance and Risk Specialist

- AI / ML Regulatory Affairs Officer

- Data Privacy and AI Governance Manager

Industries That Hire

These roles are becoming relevant in many sectors, but some sectors are more active or more heavily regulated than others:

- Technology / Software / SaaS – companies building AI/ML products

- Finance & Banking – for example, use of AI in lending, fraud detection, credit scoring

- Healthcare & Biotech – diagnostic AI tools, patient data privacy etc.

- Regulated Industries generally – insurance, telecommunications, energy, agriculture etc.

- Public Sector / Government – regulators, national AI policy, public service AI deployment

- Consumer Products / Retail / E‑Commerce where AI recommendation engines, user profiling are used

Geographical & Regulatory Differences

- In the EU, regulation is tight: the GDPR applies, and the EU AI Act entered into force in August 2024, with its obligations phasing in over the following years.

- The United States has a patchwork of federal and state-level laws, sectoral regulation, and increasing interest in AI-specific rules.

- Countries in Asia, Latin America, and Africa have varying levels of regulation; some are still building AI governance frameworks.

5. How the Job Market Looks

What are the prospects, challenges, and what do employers look for?

Demand & Growth Trends

- As regulation catches up with AI, companies are increasingly being required by law (or anticipating laws) to have someone responsible for AI compliance.

- The push for “ethical AI” from consumers, investors, and civil society is creating reputational risk if you don’t have compliance and governance frameworks.

- More organizations are doing audits of their AI models, setting up AI ethics boards, integrating compliance into product lifecycle.

Skills vs Supply Gap

- There are relatively few people right now with strong blends of legal/ethical knowledge and technical AI/ML understanding. This is a strength if you develop both.

- Employers may struggle to find people who can translate regulation into tech / process changes.

Salary & Seniority

- Because it’s a newer field, titles, responsibilities, and compensation vary a lot depending on region, company size, industry, experience.

- Senior roles (e.g., in big tech, finance) often come with high responsibility (e.g. overseeing global AI systems) and higher compensation. Entry/mid-level roles are smaller in scope.

Example Job Profiles

Some job postings reveal what companies expect. For example:

- Drafting and summarizing policy documents and their impact on stakeholders; tracking compliance obligations under regulations; preparing staff training; participating in technical discussions with AI engineers.

- Developing internal AI governance frameworks; maintaining technical documentation and supporting audits; tracking regulatory change.

6. Certifications & Frameworks You Should Know

Learning official frameworks and certifications helps both in knowledge and credibility.

Key Certifications

| Certification | What You Learn / Why Valuable |
|---|---|
| EXIN AI Compliance Professional (AICP) | Hands‑on ability to assess AI systems for transparency, bias, regulatory risk; helps implement EU AI Act, NIST AI frameworks, ISO/IEC risk standards, etc. (Source: EXIN) |
| AICCI AI Compliance Officer (AICO) | Teaches risk assessment, legal requirement interpretation, conformity & AI governance frameworks like ISO/IEC 42001. (Source: aicci.org) |
| Privacy / Data Protection Certifications (e.g. CIPP, CIPM, etc.) | Core knowledge of privacy laws, which underpin many AI compliance risks. |
| Information Security / Cybersecurity Certifications (e.g., ISO 27001) | Good for understanding security risks in AI systems & data governance. EXIN’s path starts with ISO 27001 foundation. |
| Ethics / Responsible AI Courses | From universities or professional bodies; helps your ethical reasoning, awareness of bias/fairness etc. |

Frameworks & Standards to Know

- EU AI Act: One of the first comprehensive AI laws. Includes risk-tier classification of AI systems (e.g. “high-risk”) and mandates for conformity, documentation, accountability.

- GDPR, CCPA, and other data protection laws: They apply to personal data used by AI systems.

- ISO/IEC standards: Particularly those about risk management, data quality, and AI governance (e.g. ISO/IEC 42001, published in 2023, which specifies requirements for an AI management system).

- NIST AI Risk Management Framework (AI RMF): Provides a framework for managing risk throughout the AI lifecycle.

- Industry‑specific regulatory frameworks (e.g. in healthcare, finance, etc.)

7. How to Prepare: Roadmap to Becoming an AI Compliance Officer

Here’s a suggested roadmap—steps you can take, from zero or modest experience, to building up to a strong AI compliance role.

Phase 0: Self‑Assessment & Deciding If It Suits You

Ask yourself:

- Do you enjoy both technical and legal/ethical work? You’ll often need to bridge between AI teams and legal / policy teams.

- Are you comfortable with uncertainty, ambiguity, grey areas? Many ethical issues aren’t black & white.

- Do you have patience for policy work, documentation, audit, compliance processes (which can sometimes feel bureaucratic)?

- Do you like continual learning, staying on top of tech + law + social concerns?

If yes, you may find this role fulfilling.

Phase 1: Build Foundation

- Educational foundation

- If possible, get a degree in relevant field (law, ethics, computer science, data science, or cross‑disciplinary).

- Take coursework relevant to AI, ML, data privacy, ethics.

- Learn the law & regulations

- Deep dive into privacy laws that apply in your country & globally (GDPR etc.).

- Study AI‑specific regulation (if any) in your region + in regions your organization works with (e.g. EU, US, UK).

- Technical exposure

- Learn basics of AI/ML: what is model training, bias, evaluation, explainability.

- Take online courses.

- Experiment with simple ML projects to understand data, features, model evaluation etc.

- Ethics & philosophy

- Read about AI ethics: fairness, bias, accountability, transparency, human rights.

- Understand prevailing ethical frameworks & debates.

- Develop soft skills

- Communication: practice writing, speaking, explaining technical/legal content to non‑experts.

- Analytical thinking, problem solving.

- Certifications / training

- Pick up relevant certifications (privacy, AI governance, information security).

Phase 2: Gain Practical Experience

- Work in related roles

- Roles in compliance, data governance, legal, product policy, quality assurance, risk management.

- Even internships or volunteering in these areas help.

- Participate in AI projects in your organization

- Get exposure to data pipelines, model building, testing, deployment.

- Ask to be involved in ethics reviews, risk assessment, audit of AI/ML systems.

- Build a portfolio

- Document projects where you were involved in compliance, privacy, data governance, risk management.

- Possibly write blogs, papers, or contribute to open source / community work in AI governance.

- Networking & mentoring

- Join communities focused on AI ethics, AI regulation, compliance.

- Follow thought leaders, attend webinars/conferences.

- Find mentors who are doing similar work.

Phase 3: Enter the Field & Grow

- Apply for roles that bridge gaps

- Compliance analyst / data privacy roles with AI or technical tilt.

- AI policy researcher, ethics analyst.

- Demonstrate impact

- In early roles, try to deliver tangible things: audit reports, risk assessments, policies, bias detection reports, training materials etc.

- Specialize where appropriate

- Choose an industry sector (e.g., healthcare, fintech). Deep knowledge of sector-specific regulation can be a differentiator.

- Or specialize in a sub-domain like explainability, bias / fairness auditing, privacy, technical audit.

- Stay current—Continuous Learning

- Subscribe to regulatory updates (e.g. changes in law, guidance documents)

- Learn about emerging AI technologies (transformer models, generative AI, reinforcement learning) and the new risks they bring

- Keep sharpening both legal/ethical skills and technical skills

- Aim for senior roles

- Be prepared to lead teams, design organization‑wide AI governance frameworks, engage with boards and executives, oversee large-scale risk programmes, and possibly represent the organization externally (to regulators or the public).

8. Challenges & What to Watch Out For

Being an AI Compliance Officer is rewarding but comes with real challenges. Knowing them helps you navigate better.

- Regulation still evolving / uncertain

- Many jurisdictions are still drafting AI regulation; guidance documents may be vague. You’ll often work in ambiguity.

- Technology advances quickly

- New models (e.g., large language models, generative AI) introduce risks that may not have established best practices. Keeping up is tough.

- Trade‑offs & conflicts

- Between innovation/speed to market vs compliance/risk control

- Between what’s ideal vs what’s feasible (e.g. explainability vs model performance)

- Cross‑disciplinary friction

- Tech teams might see compliance as slowing them down. Legal and regulatory staff might be less technical or slower-moving. Bridging these culture gaps is stressful.

- Resource constraints

- Many organizations don’t have big budgets for governance, ethics teams. You may have limited tools or staffing.

- Global & jurisdictional complexity

- If a company operates across multiple geographies, complying with different laws/regulations (sometimes conflicting) is hard.

- Correcting mistakes

- Once AI is deployed and causing harm (e.g. biased outcomes, a privacy leak), remediation is hard: it may require significant model retraining and data cleaning, and it may expose the organization to legal liability.

- Transparency vs secrecy

- Sometimes companies don’t want to reveal details of AI models for competitive reasons; but compliance and trust may require more transparency.

- Ethics not always visible in short‑term ROI

- Ethical and compliance work often shows its benefit later (avoiding lawsuits, reputational damage) rather than generating immediate revenue, so getting buy‑in from leadership is sometimes difficult.

- Bias in your own approach

- It’s possible to be blind to some ethical issues or biases, especially if you come from one region, culture, or background. Actively seek out diverse, inclusive, and external perspectives.

10. Sample Job Description (What Employers Expect)

To make things concrete, here’s a hypothetical job description combining many of the elements above. Use this as a template when evaluating your skills or applying for jobs.

Sample Role: AI Compliance Officer

Location: [City, Country]

Reports to: Head of Legal / Head of Governance / Chief Risk Officer

Key Responsibilities:

- Stay current on AI‑related laws, regulations, guidelines, and standards (e.g., EU AI Act, national laws, data protection regulations).

- Develop, implement, and maintain AI governance frameworks and internal policies ensuring responsible, ethical, transparent AI practices.

- Conduct risk assessments for AI projects (pre‑ and post‑deployment), identifying ethical, legal, reputational, and operational risks.

- Ensure data privacy, security, and data governance practices are enforced across AI models (training, inference, sharing).

- Review AI model pipelines for possible bias, fairness, discrimination; perform audits of model behavior.

- Oversee technical documentation, audit trails, and versioning for AI systems.

- Manage compliance programs: internal audits, monitoring, remediation, stakeholder reporting.

- Provide training and awareness programs to internal teams on AI compliance, ethics, model explainability, responsible AI.

- Serve as a liaison with regulators, external auditors, partners, and vendors to ensure compliance.

- Respond to incidents (e.g. data breaches, misuse, ethical complaints), investigate and lead corrective actions.

- Measure compliance performance through KPIs, metrics; propose improvements.

Qualifications & Skills:

- Bachelor’s or Master’s degree in Law, Computer Science, Data Science, Information Security, Ethics, or similar.

- Certifications in AI compliance, privacy/data protection, or information security.

- Solid understanding of AI/ML principles; familiarity with model training, bias, explainability.

- Deep knowledge of data protection laws (GDPR, etc.) and AI‑specific regulation.

- Experience in risk assessment, audits, policy or governance.

- Excellent communication skills; ability to translate regulation/ethics to technical teams and executives.

- Strong analytical and problem‑solving skills; attention to detail.

- High ethical standards, integrity, ability to handle ambiguity.

11. How to Position Yourself: Resume & Interview Tips

When applying or interviewing, here’s how to present yourself well.

Resume Tips

- Highlight multidisciplinary experience: e.g., projects where you worked with legal + tech + product teams.

- Quantify achievements: e.g., “Performed risk assessment of 5 AI/ML models, identified and reduced bias by X%,” “Created data governance policy used across 3 product lines,” etc.

- Include relevant certifications and trainings.

- Mention tools or methods you know (e.g. explainability tools, privacy preserving techniques, audit tools, risk frameworks).

- Emphasize soft skills: communication, ethics, ability to manage stakeholders.

Interview Preparation

- Be ready to speak about past situations where you dealt with compliance, risk, or ethics: what challenges, how you handled them.

- Understand the company’s industry and what regulations apply; show you’ve done your homework.

- Be ready to talk technically enough to show you understand AI/ML trade‑offs (e.g. explain a scenario where bias might creep in, or where explainability could conflict with model complexity).

- Be ready with suggestions: what compliance frameworks you might implement, how you’d structure a governance program, how you’d measure success.

- Demonstrate ability to balance innovation and risk—not being a blocker, but enabling responsible innovation.

12. Typical Career Path & Progression

How might your career progress if you move in this field?

| Stage | Usual Roles | Focus / Responsibilities | What to Learn or Build |
|---|---|---|---|
| Entry / Early | Compliance Analyst, Data Privacy Analyst, Policy Analyst, Junior AI Ethics / Governance roles | Support risk assessments, audits, policy drafting, monitoring, maybe small AI project exposure | Technical basics of AI/ML, data privacy law, documentation, ethics fundamentals |
| Mid‑Level | AI Compliance Officer, Governance & Risk Specialist, AI Ethics Lead | Own parts of governance program, lead risk assessments, more strategic, more cross‑team work, influencing product/AI design | Deeper regulation knowledge; managing stakeholders; designing frameworks; auditing; maybe team leadership |
| Senior | Head of AI Compliance, Director of Responsible AI, Chief AI Ethics & Compliance Officer | Own organizational policies, global regulation compliance, liaise with board, represent company externally, set strategic direction | Leadership, understanding of global legal landscape, ability to foresee regulatory changes, manage large programs and budgets |
| Specialist Tracks | Fairness / Bias Auditor, Explainability Specialist, Privacy / Security in AI, AI Risk Quantitation Specialist | Focused deep expertise in a sub‑domain | Technical mastery, research, possibly contribute to standards, publications etc. |

13. Salary & Compensation Expectations

While it depends a lot on location, industry, company size, and seniority, here are some general observations:

- Entry / early roles may pay less than roles focused purely on engineering; but in regulated industries (finance, healthcare), even entry roles in compliance can carry decent pay.

- Mid‑level AI compliance officers in large tech/finance companies often have higher compensation due to risk and responsibility.

- Senior roles, particularly overseeing global compliance or in companies with large AI systems deployed, often come with high compensation + bonuses + possibly stock options.

- In many non‑US countries, compensation is lower in nominal USD terms, so compare offers against local cost of living and industry norms.

14. Key Challenges & Ethical Dilemmas

To succeed, you need to anticipate and manage some common dilemmas, and develop strategies for them.

- Explainability vs Performance

Some models achieve high performance (accuracy) at the cost of being opaque. How much explainability is needed? Is a trade‑off acceptable?

- Bias and Fairness

Data may reflect societal bias. Defining what fairness means in context is complex (equal opportunity, equal outcome, predictive parity, etc.).

- Privacy vs Utility

More data often improves model performance. But data privacy laws / user expectations might restrict data collection or usage.

- Regulation vs Innovation

Over‑guarding can slow progress or lead to less competitive products. Under‑governing exposes risk. Finding balance is key.

- Transparency vs Proprietary/Competitive Secrecy

Companies may be reluctant to expose model internals, datasets, etc., for IP or business competition reasons, but regulators / users may need transparency.

- Global Differences

Laws and expectations differ by country. What’s acceptable in one place may be illegal or unethical in another.

- Emergence of new AI capabilities

E.g. generative AI, large language models, deepfake risk, synthetic media: these bring new compliance concerns that may not yet have settled norms.
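The Bias and Fairness dilemma above names several fairness definitions that can disagree with each other. The sketch below computes three per-group rates on a toy dataset: selection rate (compared across groups for the "equal outcome" or demographic parity view), true positive rate (equal opportunity), and precision (predictive parity). The function name `group_rates` and the data are made up for illustration; real audits would use far larger samples and usually a dedicated fairness library.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and precision for 0/1 labels."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "actual_pos": 0, "true_pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        c["actual_pos"] += truth
        c["true_pos"] += truth * pred  # 1 only when both label and prediction are 1

    def ratio(num, den):
        # Undefined rates (empty denominator) are reported as NaN.
        return num / den if den else float("nan")

    return {
        g: {
            "selection_rate": ratio(c["pred_pos"], c["n"]),
            "true_positive_rate": ratio(c["true_pos"], c["actual_pos"]),
            "precision": ratio(c["true_pos"], c["pred_pos"]),
        }
        for g, c in counts.items()
    }


# Toy data: 1 = approved (prediction) or creditworthy (ground truth).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

rates = group_rates(y_true, y_pred, groups)
for group, metrics in rates.items():
    print(group, {name: round(value, 2) for name, value in metrics.items()})
```

On this toy data group A has the higher selection rate and true positive rate, while group B has the higher precision, so the same model looks more or less fair depending on which definition is chosen. That choice is a policy decision, not only a technical one.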

15. Practical Tips & Best Practices

These are concrete things you can do or advocate for, to be effective as an AI Compliance Officer.

- Start compliance early in AI project lifecycles; don’t wait until after deployment.

- Embed compliance / ethics reviews into product development cycles (design, data collection, model development, deployment).

- Maintain good documentation: data provenance, model training, versioning, validation results, audit trails. Even seemingly small details matter.

- Use regular audits (internal or external) to verify model behavior, bias, drift etc.

- Implement metrics and KPIs: track fairness, error rates, model drift, privacy incidents etc.

- Have clear communication channels between AI teams, legal/regulatory teams, leadership.

- Build or foster an ethics or governance board or committee to review new AI projects and raise concerns.

- Use tools and techniques for explainability, bias detection, and privacy preservation (differential privacy, federated learning, etc., where applicable).

- Keep up with regulation: newsletters, legal updates, webinars, law firm publications.

- Be proactive: anticipate upcoming regulation; propose compliance frameworks rather than being reactive.
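One of the tips above is to track model drift as a metric. A common way to quantify drift in a score or feature distribution is the Population Stability Index (PSI), sketched below in plain Python. The bin edges, the sample scores, and the function names are assumptions for illustration; the sketch also assumes every value falls within the bin edges.

```python
import math


def bin_fractions(values, bin_edges, floor=1e-6):
    """Share of values in each bin; the last bin includes its upper edge."""
    counts = [0] * (len(bin_edges) - 1)
    last = len(counts) - 1
    for v in values:
        for i in range(len(counts)):
            below_upper = v <= bin_edges[i + 1] if i == last else v < bin_edges[i + 1]
            if bin_edges[i] <= v and below_upper:
                counts[i] += 1
                break
        else:
            raise ValueError(f"value {v} falls outside the bin edges")
    # A small floor keeps empty bins from causing log(0) or division by zero.
    return [max(c / len(values), floor) for c in counts]


def population_stability_index(baseline, recent, bin_edges):
    """PSI = sum over bins of (recent - baseline) * ln(recent / baseline)."""
    base = bin_fractions(baseline, bin_edges)
    new = bin_fractions(recent, bin_edges)
    return sum((n - b) * math.log(n / b) for b, n in zip(base, new))


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]   # scores at validation time
recent_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95]    # scores seen in production

psi_same = population_stability_index(baseline_scores, baseline_scores, edges)
psi_shifted = population_stability_index(baseline_scores, recent_scores, edges)
print(f"PSI vs itself: {psi_same:.3f}, PSI vs recent: {psi_shifted:.3f}")
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but these cut-offs are a convention rather than a regulatory requirement, and an organization would set its own alert thresholds.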

16. Real‑World Example / Case Studies (Hypothetical + Known Trends)

While specific company case studies are often confidential, here are illustrative examples & trends:

- A fintech company deploying AI for credit scoring implemented fairness audits: checking whether protected groups (race, gender, etc.) are being unfairly disadvantaged. They found bias in training data. They retrained with different sampling techniques and introduced fairness constraints, then documented changes and included explainability tools in their app.

- A healthcare provider using an AI tool for patient diagnosis: data privacy was the main concern. The work covered patient data anonymization, data sharing across institutions, and model explainability, so that clinicians could understand the model's decisions.

- A tech startup offering AI‑driven advertising targeting: it faced regulatory risk around user profiling and possible privacy law violations. The company created data privacy policies, data collection disclosures, an opt‑in consent flow, and impact assessments.

- Organizations using generative AI (e.g. text/image generators) are being asked to document whether outputs might infringe copyright, may produce misinformation, or be biased. Compliance officers are beginning to create policies around dataset curation, prompt monitoring, usage disclaimers.
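The fintech example above mentions retraining with different sampling techniques after finding bias in the training data. One possible technique of that kind, not necessarily what any particular company used, is the reweighing scheme described by Kamiran and Calders: each training example gets the weight P(group) × P(label) / P(group, label), so that group membership and label become statistically independent in the weighted data. The data below is a toy example.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Toy training data: group A is mostly labeled positive, group B mostly negative.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)


def weighted_positive_rate(group):
    """Positive-label rate within a group after applying the weights."""
    rows = [(w, y) for w, y, g in zip(weights, labels, groups) if g == group]
    return sum(w * y for w, y in rows) / sum(w for w, _ in rows)


for g in ("A", "B"):
    print(g, round(weighted_positive_rate(g), 3))
```

Many training APIs accept per-example weights (for instance, the `sample_weight` argument that a number of scikit-learn estimators take in `fit`), so weights like these can be passed in without changing the data itself. Whether reweighting is appropriate, and which attributes may lawfully be used for it, is a question for the compliance review rather than the code.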

17. Sample Learning Resources

Here are books, courses, organizations, and resources to help you build knowledge.

- Online courses: e.g. “AI Ethics”, “Responsible AI”, “Data Privacy” courses from Coursera, edX, Udacity.

- University programs or extension courses in Data Privacy, AI Governance, Ethics (look for interdisciplinary programs).

- Certifications (as already mentioned: EXIN, AICCI etc.)

- Reading materials:

- “The Ethical Algorithm” by Michael Kearns & Aaron Roth

- “Weapons of Math Destruction” by Cathy O’Neil

- Research papers & journals on algorithmic fairness and AI transparency

- Blogs, newsletters, organizations working in AI ethics / compliance: e.g. Partnership on AI, AI Now, Future of Life Institute, IEEE’s initiatives, etc.

- Legal/regulatory bodies’ publications (EU Commission, national data protection authorities) for rules/guidelines.

18. Applying This to Your Context (India / Asia / Other Regions)

Since laws, norms, business culture, regulation differ by country, here are things to consider for someone in India (or similar regions), or wanting to work globally:

- India is gradually developing AI regulation; as it takes shape, early entrants into AI compliance roles may have an advantage.

- India's Digital Personal Data Protection Act, 2023 is relevant to AI systems that process personal data. Track the Act, its implementing rules, and any draft AI regulations.

- Understand local laws (labour, consumer protection, privacy), and also international laws if you work for global companies or with international data.

- Cultural expectations (e.g. around privacy, bias) may differ; what is “fair” or “transparent” may have different social meaning.

- Companies in India may have fewer resources for full governance / ethics teams, so roles may require wearing multiple hats.

- Remote work / global compliance: many companies hire across countries; knowledge of international regulation is increasingly important.

20. Action Plan: 30/60/90 Days If You Want to Transition

If you already have some background and want to move toward an AI compliance role, here’s a plan for the first 90 days:

| Time Frame | Goals / Actions |
|---|---|
| Days 1‑30 | Perform self‑assessment: strengths / gaps in technical, legal, ethical areas. Start studying regulation relevant to your region (and globally). Enroll in an online course in AI ethics or AI regulation. Begin reading papers/blogs in AI governance. |
| Days 31‑60 | Get hands on: if possible at your current job, volunteer for any AI or data/privacy/compliance initiatives. Try a small project: maybe audit a model (if available), document data lineage, do a risk assessment. Connect with mentors or communities in AI governance. Earn one certification. |
| Days 61‑90 | Flesh out a portfolio: write up project(s), maybe blog about one. Apply for roles or internal lateral moves in compliance/data governance with AI angle. Sharpen soft skills: presentation, policy drafting. Start preparing for interviews. |

Conclusion

The AI Compliance Officer is a fulfilling and increasingly essential role in the tech ecosystem. It offers you a chance to shape how AI is used in ways that are safe, fair, ethical, and legal. The path requires multidisciplinary learning, ethical conviction, technical awareness, legal understanding, and the ability to work across teams.

If you equip yourself with the right mix of skills (legal, technical, ethical, interpersonal), gain relevant experience (compliance, privacy, AI/ML exposure), and stay curious and proactive, you can build a strong career in this space.
